Web Scraping - COVID-19 Data

Web scraping is the (generally automatic) process of collecting semi-structured data from the web, filtering and storing it, and then using it in another process.

Table of Contents

- Motivation

- Process

- Data

- Analysis

- Dependencies

- Run Bot

- DataViz

- Documentation

- Contributing and Feedback

- Author

- License

Motivation

Build a web scraper bot that collects data on confirmed COVID-19 cases and deaths so that they can be analyzed.

Process

- Run the Web Scraper with Selenium to obtain the historical data. It runs only once.

- Run the Web Scraper with BeautifulSoup to obtain the daily data (every day, every x hours).

- Export the historical daily data to a CSV file to feed the Power BI dashboard.

- Use the COVID-19 dashboard (built in Power BI) to analyze the data and find insights.
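The CSV export step can be sketched with the standard `csv` module. This is a minimal sketch, assuming each scraped record is a dict keyed by the column names listed under Data; the function name `export_to_csv` is hypothetical, and the original bot may write its file differently.

```python
import csv
from pathlib import Path

# Column order taken from the variables listed in the Data section
COLUMNS = [
    "country", "total_cases", "total_deaths", "total_recovered",
    "active_cases", "serious_critical", "total_tests",
    "tot_cases_1m_pop", "deaths_1m_pop", "tests_1m_pop", "datestamp",
]


def export_to_csv(records, path):
    """Append records (dicts keyed by COLUMNS) to a CSV file.

    Hypothetical helper: the header row is written only when the file is
    missing or empty, so repeated runs keep adding rows to the same file.
    """
    path = Path(path)
    write_header = not path.exists() or path.stat().st_size == 0
    with path.open("a", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=COLUMNS)
        if write_header:
            writer.writeheader()
        writer.writerows(records)
```

Appending instead of overwriting keeps the whole history in one file, which is what the dashboard needs to plot curves over time.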

Data

The data obtained through web scraping are:

| Variable | Description |
|---|---|
| country | Country name |
| total_cases | Total number of cases |
| total_deaths | Total number of deaths |
| total_recovered | Total number of people recovered |
| active_cases | Number of active cases |
| serious_critical | Number of critical cases |
| total_tests | Total number of tests |
| tot_cases_1m_pop | Number of cases per one million population |
| deaths_1m_pop | Number of deaths per one million population |
| tests_1m_pop | Number of tests per one million population |
| datestamp | Data timestamp (UTC-5 time zone) |
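The values on the scraped page arrive as text, so they need converting before they are stored. This is a minimal sketch, assuming the cells are strings with comma thousands separators (for example `"34,134,840"`) and that empty or `N/A` cells mean "not reported"; the helper names are hypothetical. The datestamp uses a fixed UTC-5 offset from the standard library in place of the `pytz` time zone the project imports.

```python
from datetime import datetime, timedelta, timezone

# Fixed UTC-5 offset, matching the datestamp description in the table above
UTC_MINUS_5 = timezone(timedelta(hours=-5))


def parse_count(cell):
    """Convert a scraped cell such as '34,134,840' to a number.

    Returns None for empty or 'N/A' cells (assumed "not reported").
    Per-million columns may carry decimals, so a float is returned
    when the text contains a '.'.
    """
    text = cell.strip().replace(",", "")
    if text in ("", "N/A"):
        return None
    return float(text) if "." in text else int(text)


def make_datestamp(now=None):
    """Return `now` (default: the current UTC time) expressed in UTC-5."""
    if now is None:
        now = datetime.now(timezone.utc)
    return now.astimezone(UTC_MINUS_5)
```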

Furthermore, to complete the data analysis and its visualization, other variables had to be derived, such as:

| Variable | Description | Definition |
|---|---|---|
| perc_deaths | Percentage of deaths | total_deaths * 100 / total_cases |
| perc_infection | Percentage of infections or contagions | total_cases * 100 / total_tests |
| new_total_cases | New daily cases | total_cases_today - total_cases_yest |
| new_total_deaths | New daily deaths | total_deaths_today - total_deaths_yest |
| new_active_cases | New daily active cases | active_cases_today - active_cases_yest |
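The definitions in the table above can be computed from two consecutive daily snapshots of the same country. This is a minimal sketch in plain Python; the function name and the dict-based snapshot format are assumptions, and the project itself may compute these values in SQL Server or Power BI instead. A zero or missing denominator (for example, a country with no reported tests) yields None instead of raising.

```python
def derive_variables(today, yesterday):
    """Compute the derived variables for one country.

    `today` and `yesterday` are dicts with numeric total_cases,
    total_deaths, total_tests and active_cases (hypothetical format).
    """

    def pct(numerator, denominator):
        # Percentage guarded against a zero or missing denominator
        if not denominator:
            return None
        return numerator * 100 / denominator

    return {
        "perc_deaths": pct(today["total_deaths"], today["total_cases"]),
        "perc_infection": pct(today["total_cases"], today["total_tests"]),
        "new_total_cases": today["total_cases"] - yesterday["total_cases"],
        "new_total_deaths": today["total_deaths"] - yesterday["total_deaths"],
        "new_active_cases": today["active_cases"] - yesterday["active_cases"],
    }
```

Note that `new_active_cases` can be negative: it drops whenever more cases close (recoveries or deaths) than new cases are reported.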

The SQL Server scripts used to create the tables can be found here.
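Loading rows into SQL Server through `pyodbc` can be sketched as follows. The table name `covid_data`, its reduced column list, and the connection-string values are placeholders, not the project's actual schema (the real table definitions are in the scripts linked above). Because `pyodbc` and Python's built-in `sqlite3` both use `?` parameter markers, the same insert function works with either connection, which makes it easy to try out locally.

```python
# Placeholder connection string: driver, server and database are assumptions.
# Usage with SQL Server: conn = pyodbc.connect(CONN_STR)
CONN_STR = (
    "DRIVER={ODBC Driver 17 for SQL Server};"
    "SERVER=localhost;DATABASE=covid19;Trusted_Connection=yes;"
)

# Hypothetical table and a subset of the columns from the Data section
INSERT_SQL = (
    "INSERT INTO covid_data (country, total_cases, total_deaths, datestamp) "
    "VALUES (?, ?, ?, ?)"
)


def insert_rows(conn, rows):
    """Insert (country, total_cases, total_deaths, datestamp) tuples.

    Works with any DB-API connection that uses the '?' paramstyle,
    e.g. pyodbc.connect(CONN_STR) or sqlite3.connect(":memory:").
    """
    cursor = conn.cursor()
    cursor.executemany(INSERT_SQL, rows)
    conn.commit()
```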

World COVID-19 data was collected over 253 days. The latest data reported by country can be seen at the following link.

Below are some final statistics, with data updated through September 30 (UTC+0):

| Variable | Value |
|---|---|
| Final Date | 9/30/2020 |
| Countries infected | 213 |
| Total Cases | 34,134,840 |
| Total Deaths | 1,018,033 |
| Active Cases | 6,587,728 |
| Total Tests | 648,926,831 |
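As a worked example, applying the perc_deaths and perc_infection definitions from the Data section to these world totals gives:

$$
\text{perc\_deaths} = \frac{1{,}018{,}033 \times 100}{34{,}134{,}840} \approx 2.98\%
\qquad
\text{perc\_infection} = \frac{34{,}134{,}840 \times 100}{648{,}926{,}831} \approx 5.26\%
$$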

Analysis

- PCA Data Analysis

- Curve Similarity Analysis - Cases

- Curve Similarity Analysis - Deaths

- Similarity of the Curve Slopes
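The README does not describe how the curves were compared. As one possible approach (not necessarily the one used in the project), two countries' daily curves can be compared with cosine similarity, and their slopes can be approximated by day-to-day differences before comparing them. A minimal pure-Python sketch with hypothetical function names:

```python
import math


def slopes(curve):
    """Approximate the slope of a daily curve by day-to-day differences."""
    return [b - a for a, b in zip(curve, curve[1:])]


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length curves (1.0 = same shape).

    Returns 0.0 when either curve is all zeros.
    """
    if len(a) != len(b):
        raise ValueError("curves must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0
```

Because cosine similarity ignores scale, a small country and a large one with the same epidemic shape score close to 1.0; comparing `slopes(curve)` instead of the cumulative totals focuses on how fast each curve grows.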

Dependencies

The project was developed with the latest version of Anaconda on Windows.

If the main web scraping libraries are not included in your Anaconda distribution, you can install them with the following commands:

conda install -c anaconda pyodbc

conda install -c anaconda beautifulsoup4

conda install -c conda-forge selenium
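Before running the bot, it can help to confirm that these packages are importable from the active environment. A small sketch using only the standard library; note that `beautifulsoup4` is imported as `bs4`. The function name is hypothetical.

```python
import importlib.util

# conda package name -> module name used in `import`
REQUIRED = {"pyodbc": "pyodbc", "beautifulsoup4": "bs4", "selenium": "selenium"}


def missing_packages(required=REQUIRED):
    """Return the package names whose modules cannot be found."""
    return [pkg for pkg, module in required.items()
            if importlib.util.find_spec(module) is None]


if __name__ == "__main__":
    missing = missing_packages()
    print("Missing:", ", ".join(missing) if missing else "none")
```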

The specific Python 3.7.x libraries used are:

# Import custom libraries

import util_lib as ul

# Import util libraries

import logging

import pytz

from pytz import timezone

from datetime import datetime

# Email libraries

import smtplib

import ssl

from email.mime.multipart import MIMEMultipart

from email.mime.text import MIMEText

# Database libraries

import pyodbc

# Import Web Scraping libraries

from urllib.request import urlopen

from urllib.request import Request

from urllib.error import HTTPError

from urllib.error import URLError

from bs4 import BeautifulSoup

# Import Web Scraping 2 libraries

from selenium import webdriver

from selenium.webdriver.chrome.options import Options

Note: To use the web scraper that fetches the historical data, you may need to download the Chrome driver (ChromeDriver) used by the Selenium library and place it in the driver folder.
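The `urllib` imports listed above are typically combined like this to download a page before handing it to BeautifulSoup. This is a minimal sketch, not the project's actual code: the function name, the timeout, and the `User-Agent` value are assumptions.

```python
import logging
from urllib.error import HTTPError, URLError
from urllib.request import Request, urlopen

# Browser-like header; some sites reject urllib's default User-Agent (assumption)
HEADERS = {"User-Agent": "Mozilla/5.0"}


def fetch_html(url, timeout=30):
    """Download a page and return its decoded text, or None on failure."""
    request = Request(url, headers=HEADERS)
    try:
        with urlopen(request, timeout=timeout) as response:
            charset = response.headers.get_content_charset() or "utf-8"
            return response.read().decode(charset)
    except HTTPError as error:  # server answered with an error status
        logging.error("HTTP error %s for %s", error.code, url)
    except URLError as error:  # network problem or unreachable host
        logging.error("Cannot reach %s: %s", url, error.reason)
    return None
```

`HTTPError` is caught before `URLError` because it is a subclass of it. The returned text can then be parsed with `BeautifulSoup(html, "html.parser")`.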

Run Bot

There are several ways to run this web scraper bot on Windows:

- Type the following commands at the Anaconda Prompt:

cd "WebScraping_Covid19\code\"

python web_scraper.py

- Type the following commands at the Windows Command Prompt. The Anaconda Python paths must first be added to: Environment Variables -> User Variables.

cd "WebScraping_Covid19\code\"

conda activate base

python web_scraper.py

- Directly run the batch file run-win.bat (found in the run/ folder).
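Since the bot runs unattended, the email imports listed under Dependencies (`smtplib`, `ssl`, `MIMEMultipart`, `MIMEText`) suggest it can report on each run by email. A minimal sketch of that step; the helper names, the SMTP host and port, and the use of the sender address as the login name are all assumptions.

```python
import smtplib
import ssl
from email.mime.multipart import MIMEMultipart
from email.mime.text import MIMEText


def build_message(sender, recipient, subject, body):
    """Build a plain-text email (hypothetical helper)."""
    message = MIMEMultipart()
    message["From"] = sender
    message["To"] = recipient
    message["Subject"] = subject
    message.attach(MIMEText(body, "plain"))
    return message


def send_message(message, host, password, port=465):
    """Send `message` over SMTP with implicit TLS (port 465 is an assumption)."""
    context = ssl.create_default_context()
    with smtplib.SMTP_SSL(host, port, context=context) as server:
        # Assumes the sender address is also the SMTP login name
        server.login(message["From"], password)
        server.send_message(message)
```

Keeping message construction separate from sending makes the content easy to check without contacting a mail server.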

Automate Execution

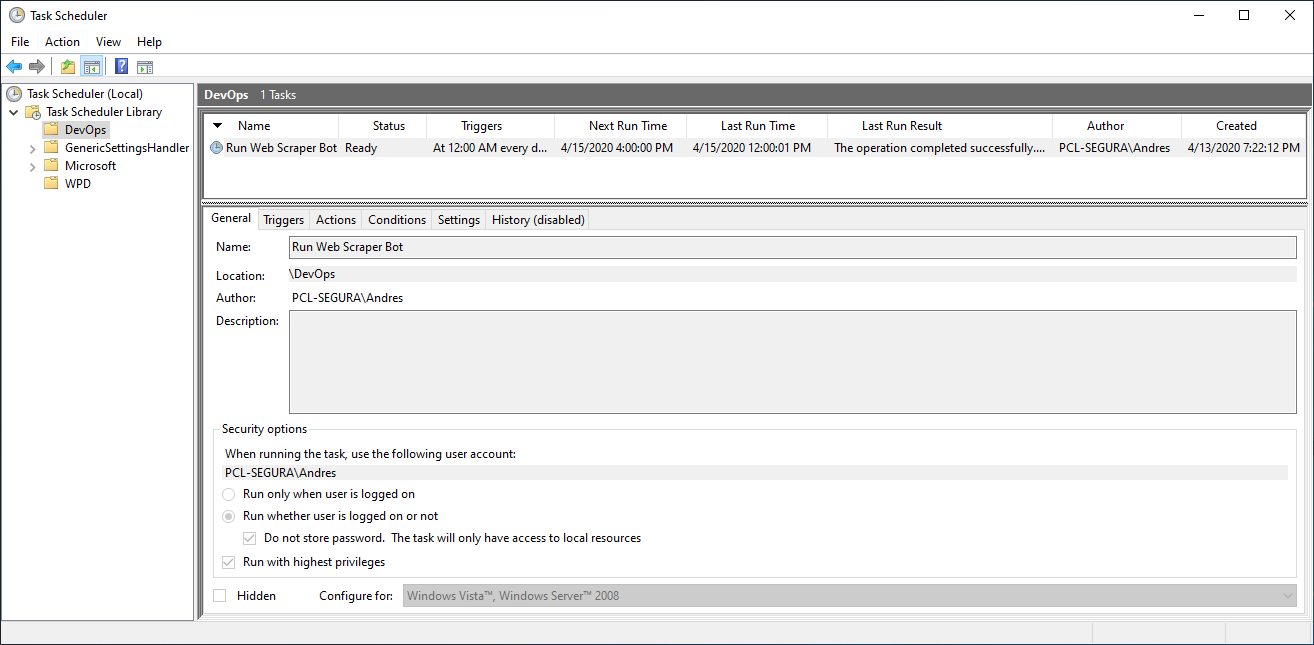

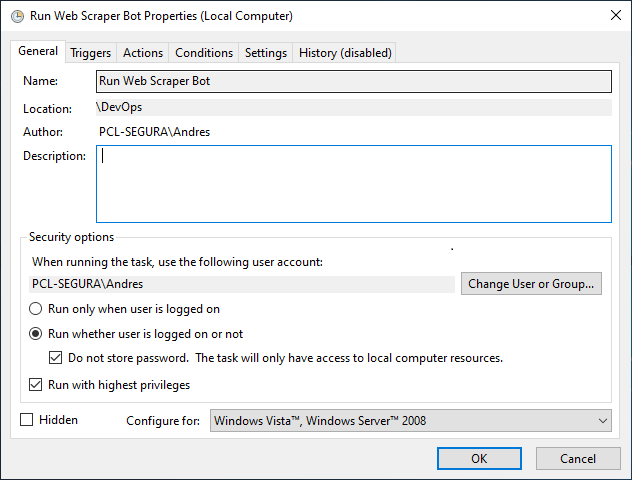

To automate the process, create a task in Windows Task Scheduler that runs the web scraper bot every x hours:

- Create a new Task in Windows Task Scheduler.

- Set up a trigger that runs the task every day, repeating every x hours.

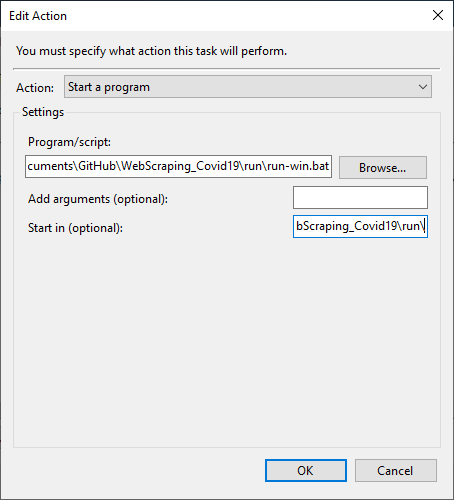

- In the Actions tab, select the .bat file and set the folder from which the task will run (the "Start in" field).
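The same schedule can also be registered from the command line with `schtasks.exe`, as an alternative to the Task Scheduler steps above. This sketch only builds the command; the task name and the batch-file path are hypothetical. `/SC HOURLY` with `/MO N` repeats the task every N hours (1 to 23).

```python
def schtasks_command(task_name, bat_path, every_hours, start_time="00:00"):
    """Build a schtasks.exe command that runs `bat_path` every N hours."""
    if not 1 <= every_hours <= 23:
        raise ValueError("every_hours must be between 1 and 23")
    return [
        "schtasks", "/Create",
        "/TN", task_name,          # task name shown in Task Scheduler
        "/TR", bat_path,           # program or batch file to run
        "/SC", "HOURLY",           # hourly schedule...
        "/MO", str(every_hours),   # ...repeated every N hours
        "/ST", start_time,         # first run time (HH:mm)
    ]


if __name__ == "__main__":
    # Hypothetical task name and path
    cmd = schtasks_command("CovidScraper", r"C:\WebScraping_Covid19\run\run-win.bat", 6)
    print(" ".join(cmd))
    # On Windows, to register the task:
    # import subprocess; subprocess.run(cmd, check=True)
```

`schtasks /Create` does not appear to offer an equivalent of the "Start in" field, so with this approach the batch file may need to change to its own folder first (for example with `cd /d %~dp0`).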

DataViz

Below is the COVID-19 dashboard that was created to visually analyze the collected data.

Documentation

Below are some useful links relevant to this project:

- Create and Populate Date Dimension

- Python Web Scraping Tutorials

- 10 Web Scraping Tools (Spanish)

- Run Anaconda Python in CMD

- Schedule a Batch file to run Automatically

- Get information about countries via a RESTful API

- Sending Emails With Python

Contributing and Feedback

Any kind of feedback/criticism would be greatly appreciated (algorithm design, documentation, improvement ideas, spelling mistakes, etc…).

Author

- Created by Andrés Segura Tinoco

- Created on Apr 10, 2020

License

This project is licensed under the terms of the MIT license.